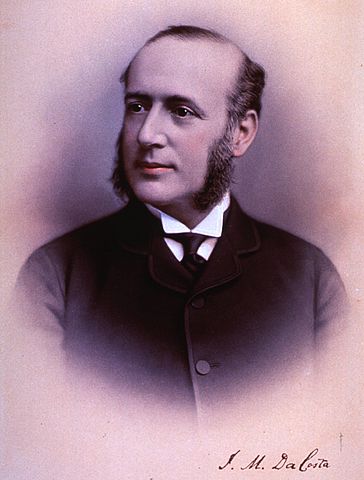

The psychological toll of combat has been known by many names over the course of history. The earliest recorded identification by a medical source of emotional or psychological disorders affecting a man’s combat ability comes from the U.S. Civil War, when Union surgeon Dr. Jacob Da Costa identified a condition among men who reported to sick call (the Army’s equivalent of an emergency room for illness) complaining of nervous discomfort and shortness of breath. Unofficially it was referred to as “Da Costa’s Syndrome,” but it was also called “nostalgia” and “irritable heart.” Whatever the early medical terms, they were attempting to describe the effects of violent combat on the men who were fighting in it. Although medical staff first identified it as an illness, no medical authority, North or South, conducted any official or systematic study of its causes and possible treatments.

For the United States, the next reference to psychological illness came in 1917, during World War I. The Spanish-American War of 1898 did not have the intense close-order fighting of the Civil War; the fighting lasted only some eight months, and many of the battles were sharp but short. Many of the symptoms first seen in the U.S. Civil War arose again amid the misery of life in the frequently flooded, filthy trenches of northern France. Artillery bombardments, short and inaccurate in 1865, now became torrents lasting days and even weeks, an accurate hurricane of steel and high explosives. Combined with the other new terror of the Great War, accurate and sustained machine-gun fire, and with the inability to break the logjam of positional warfare until the last months of the war in 1918, weeks and months in the same trench made a perfect laboratory for all kinds of illnesses, physical as well as psychological.

The Doughboys of the American Expeditionary Forces called it “shell shock.” The existing treatment was to separate those affected from the rest of the unit, lest they “infect” others with the malady, and to send them to the rear for “rest.” Again, neither U.S. Army medical authorities nor civilian medical organizations made any official, organized effort to understand the causes of and possible treatments for “shell shock” until well after the war. Because U.S. participation lasted less than two years, far shorter than that of the other major combatants on the Western Front (Germany, France and Great Britain), rest and quiet remained the established cure. One early British method of “treatment” (1915) was punitive: if a man reported an injury but had no physical wound, he was labeled “S” for “sickness,” denied the privilege of wearing a wound stripe on his sleeve, and lost his pension. Although aid stations near the front set aside places for men reporting nervous symptoms, these were temporary. The usual treatment was several hours of rest or a day or two of sleep, after which the men were returned to their units.

On 7 December 1941 the United States once again found itself involved in a world war. The Imperial Japanese Navy’s attack on the U.S. Navy base on Oahu catapulted the U.S. military into the costliest and deadliest war the world had yet seen. Because the U.S. Army replaced casualties in its field divisions as they occurred rather than pulling whole divisions out of the line, these divisions spent far longer at the front than those of the other Allied armies: some 80 days on average, compared with the British, who rotated their units into and out of the front every two weeks. This sustained action, with no relief for prolonged periods, was one of the chief sources of what became known as “combat fatigue.”

Non-physical combat injuries rose to unprecedented levels, and a solution had to be found before large segments of the fighting force became incapacitated. In 1943 Army Captain Frederick Hanson was assigned to investigate the many cases of combat fatigue following the U.S. defeat at Kasserine Pass in North Africa. His revolutionary treatment did not involve lengthy separation for “rest,” removal from the combat zone, or discharge; instead, it combined treatment, counseling and induced sleep, all within sound of the front-line artillery. His success, with recovery rates as high as 70 percent in some cases, was a significant development in treatment, although not the panacea that had been hoped for.

A major difficulty in treating the illness, whether temporary or permanent, was that the men suffering from it were reluctant to bring it to their superiors’ attention for fear of being seen as cowards, until they either broke down or fled the field. The stigma of cowardice was unfortunately reinforced by none other than U.S. General George S. Patton, who, during the 1943 campaign on the Italian island of Sicily, slapped not one but two soldiers suffering from what was then described as combat fatigue.

For many men, one drastic way out of the stress of combat was desertion. Although desertion was punishable by death in almost all the armies of World War II, some 40,000 U.S. soldiers deserted, the far smaller British Army lost some 100,000 men to desertion, and German Army documents refer to some 300,000 soldiers lost the same way.

By the first decade of the 21st century, similarly prolonged combat assignments in the field had again produced combat fatigue, now identified as post-traumatic stress disorder (PTSD). The treatments developed during World War II laid the foundation for effective care of these invisible wounds of war.

Sources for this article: “The Breaking Point” by Duane Schultz, WWII History magazine, June 2013; and “Between Flesh and Steel: A History of Military Medicine from the Middle Ages to the War in Afghanistan” by Richard A. Gabriel, Potomac Books, 2013.